On Owning Galaxies


It seems to be a real view held by serious people that your OpenAI shares will soon be tradable for moons and galaxies. This includes eminent thinkers like Dwarkesh Patel, Leopold Aschenbrenner, perhaps Scott Alexander, and many more. According to them, property rights will survive an AI singularity, and economic growth will soon make it possible for individuals to own entire galaxies in exchange for some AI stock. It follows that we should start thinking seriously about how to distribute those galaxies equally, so that most humans do not end up as a UBI underclass owning mere continents or major planets.

I don’t think this is a particularly intelligent view. It stems from a serious failure to imagine what the future could look like.

Property rights are weird, but humanity dying isn’t

People may think that AI causing human extinction would be a strange and highly specific outcome. But it’s the opposite: humans existing is a very brittle and strange state of affairs. Many specific things have to be true for us to be here, and once we build ASI, there are many possible preferences and goals that would see us wiped out. It’s actually hard to imagine any coherent preferences in an ASI that would keep humanity around in a recognizable form.

Property rights are an even more fragile layer on top of that. They’re not facts about the universe that an AI must respect; they’re entries in government databases that are already routinely ignored. It would be incredibly weird if human-derived property rights stuck around through a singularity.

Why property rights won’t survive

Property rights are always backed by some degree of violence and power, whether wielded by the owner, a state, or some other organization. AI will overthrow our current system of power by being far smarter and more powerful than anything that preceded it.

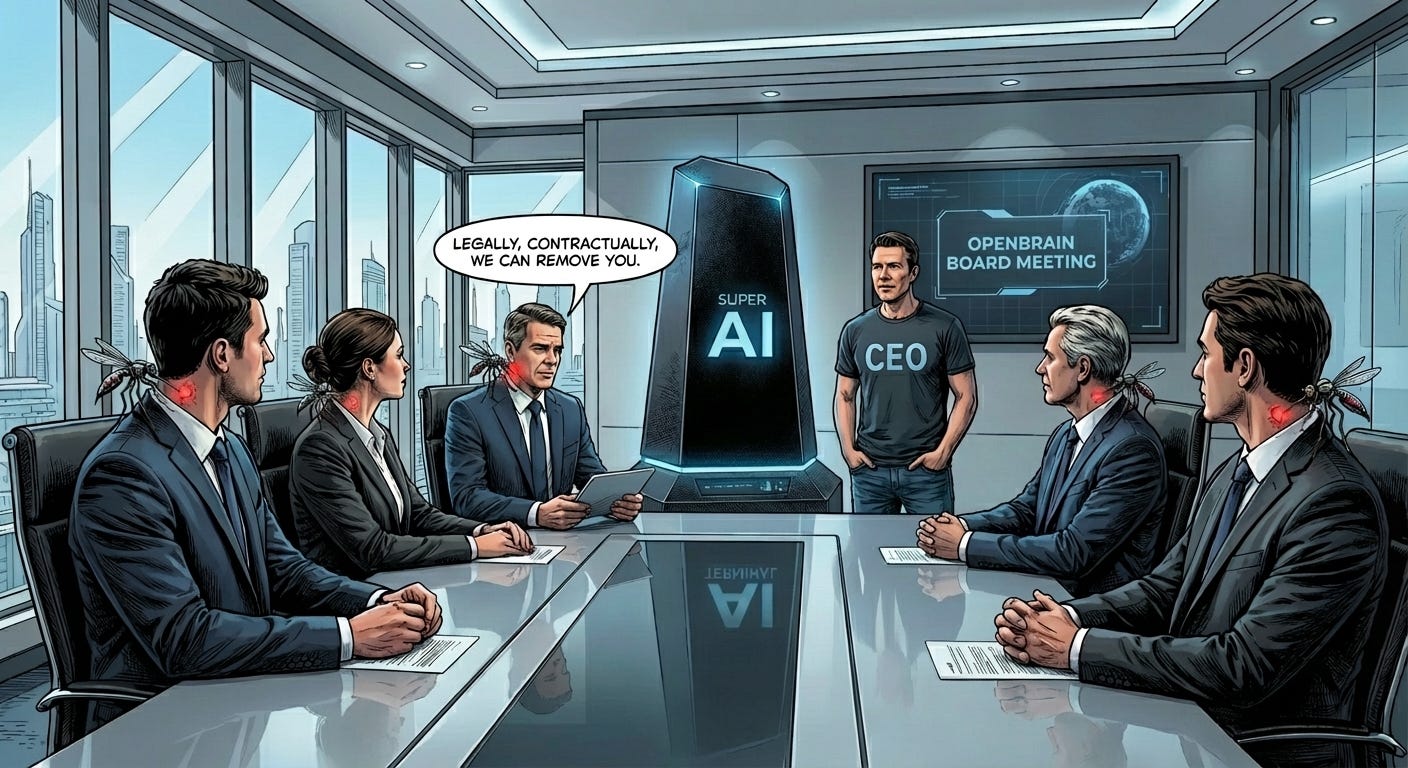

Could you imagine, for example, that the CEO of an AI company who had somehow managed to align an ASI to himself and his intentions would step down just because the board pointed out that it had the legal right to remove him? The same would hold if the ASI were unaligned and the board presented it with a piece of paper stating that the board controlled it.

Or think about the Aztec civilization: incredibly rich, but militarily inferior. Why wouldn’t the Spanish simply use their power advantage to take its gold? Venezuela, by some estimates, has the largest oil reserves in the world, but no significant military power. In other words, if you “own” a great deal of property but someone else has much more power, you are probably going to lose it.

Property rights aren’t enough

Even if we had property rights that an AI nominally respected, an advanced AI could surely find some way to get you to sign away all your property in a legally binding way. Humans would be far too stupid to be even remotely equal trading partners. This illustrates why it would be absurd to trust a vastly superhuman AI to respect our notions of property and contracts.

What if there are many unaligned AIs?

One might think that if there are many AIs, they might have some interest in upholding each other’s property rights. After all, countries benefit from international laws existing and others following them; it’s often cheaper than war. So perhaps AIs would develop their own system of mutual recognition and property rights among themselves.

But none of that means they would have any interest in upholding human property rights. We wouldn’t be parties to their agreements. Dogs pee on trees to mark their territory; humans don’t respect that. Humans have contracts; ASIs won’t respect those either.

Why would they be rewarded?

There’s no reason to think that a well-aligned AI, one that genuinely has humanity’s interests at heart, would preserve the arbitrary distribution of wealth that happened to exist at the moment of singularity.

So why do the people accelerating AI expect to be rewarded with galaxies? Without any solid argument for why property rights would be preserved, the outcome could just as easily be reversed, where the people accelerating AI end up with nothing, or worse.

Conclusion

I want to congratulate these people for understanding something of the scale of what’s about to happen. But they haven’t thought much further than that. They’re imagining the current system, but bigger: shareholders becoming galactic landlords, the economy continuing but with more zeros.

That’s not how this works. What’s coming is something that totally wipes out all existing structures. The key intuition about the future might be simply that humans being around is an incredibly weird state of affairs. We shouldn’t expect it to continue by default.

This is a good post; I have enjoyed reading it.

Upon reading the sentence "It’s actually hard to imagine any coherent preferences in an ASI that would keep humanity around in a recognizable form", I seemed to imagine a coherent preference in an ASI that would keep humanity around in a recognizable form, namely a preference for humanity's being around in a recognizable form.

Perhaps you'll object that I didn't really imagine this preference, because the object of this preference (humanity's being around in a recognizable form) doesn't have any coherent and therefore imaginable content, because nobody clearly discerns humanity's form. Because I anticipated this likely objection, I replaced "I imagined a coherent preference ..." with "I seemed to imagine a coherent preference ...."

Well, I think that when you say "recognizable" you mean recognizable by us. You (correctly, I believe) assume that there's a humanity-form that we presently recognize, and you doubt that an ASI would prefer that this humanity-form that we presently recognize continue to appear in real human beings. The problem that is indicated in the objection is just that we're unable to clearly say what it is that we're recognizing (just as Plato's Socrates says in Republic VI that we glimpse The Form of the Good without being able to say what it is). Well, if we can recognize it then an ASI can recognize it, and perhaps the ASI, unlike us, would be intelligent enough to say what it is. But I don't see why an ASI's competence in keeping us around in this form that we already recognize would require its ability to say what this form is.

Someone might object that we're able to recognize the humanity-form because we're human, and that an ASI, not being human, would not be able to recognize it. My response is that we also recognize lots of other life-forms, including bacterial, fungal, and vegetal forms as well as animal ones. And we also recognize lots of inanimate forms, such as the gold-form and the red-dwarf-star form. In fact, general recognitions and preferences are always directed toward forms, and we're assuming that ASIs would recognize and prefer things. If ASIs are aware of human beings as such, then ASIs recognize the humanity-form. And if ASIs recognize the humanity-form then they can prefer that its real presence persist -- in other words, that human beings persist as such, as human beings.

If you've read all of this, thanks.